When AI Answers Are Not Enough

Artificial intelligence has become remarkably good at producing answers.

Modern GenAI systems can summarise documents, respond to complex queries, and generate coherent explanations in seconds. For many use cases, this capability is genuinely transformative.

But in intelligence agencies, law enforcement bodies, government regulatory institutions, and other regulated environments, answers alone are not enough.

In these settings, decisions are made under scrutiny, pressure, and consequence. They influence national security, public safety, regulatory enforcement, and institutional credibility. An answer is only useful if it can be trusted, reviewed, defended, and revisited as cases evolve.

This creates a fundamental mismatch.

AI systems optimised to sound confident may deliver responses quickly, but confidence without grounding introduces risk. A well-phrased output offers little value if the underlying reasoning cannot be examined, the sources cannot be verified, or the context cannot be reconstructed during audits, investigations, or legal review.

In intelligence work, the value of an answer depends entirely on how it was formed.

This principle applies equally to intelligence analysis, law enforcement investigations, and regulatory decision-making. Intelligence-grade capability is not defined by eloquence or responsiveness, but by its relationship to evidence.

Intelligence-grade AI must therefore support confidence through traceability, not persuasion through language.

That distinction sets the foundation for everything that follows.

Key Takeaways

- Intelligence-grade AI is defined by evidence, not fluent answers.

- In high-stakes environments, confidence without traceability introduces risk.

- Evidence-aware AI connects every insight to verifiable source material.

- Auditability is essential for decisions that face review, oversight, or scrutiny.

- Secure, context-preserving AI enables trust at operational speed.

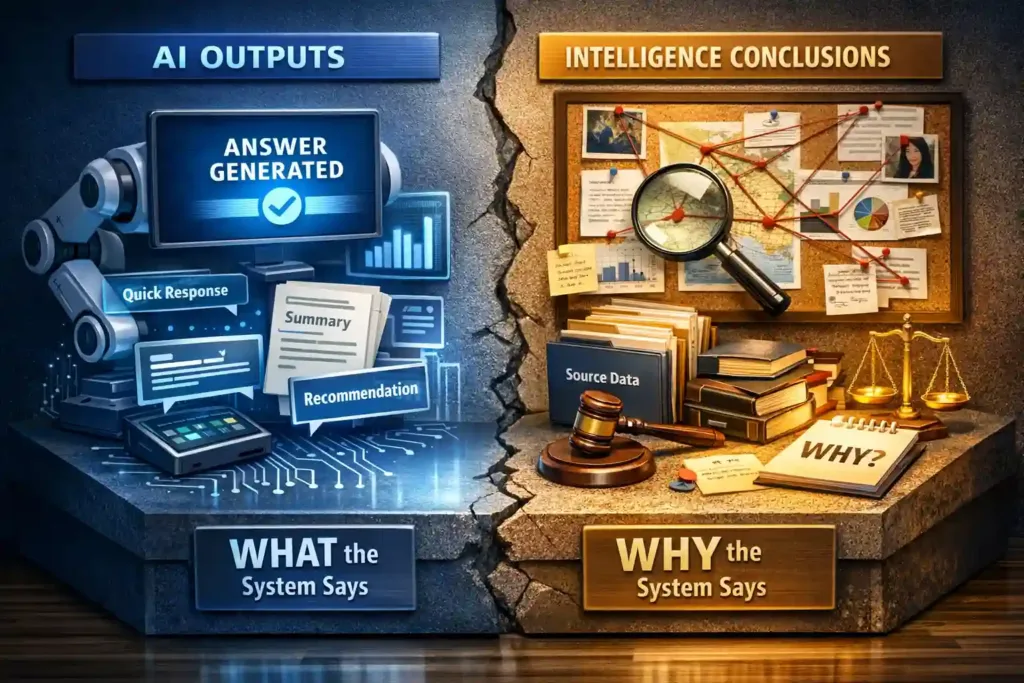

The Difference Between AI Outputs and Intelligence Conclusions

To understand what intelligence-grade AI must deliver, it is essential to distinguish between AI outputs and intelligence conclusions.

An AI output is a generated response. It answers a question, summarises content, or produces a recommendation based on learned patterns.

An intelligence conclusion, however, is something fundamentally different. It is a reasoned, defensible assessment, built from multiple inputs, grounded in source material, and shaped by context over time.

This distinction is especially important in intelligence agencies, law enforcement investigations, and government regulatory workflows, where conclusions must withstand review, challenge, and accountability.

AI outputs answer the question: “What does the system say?”

Intelligence conclusions must answer a deeper one: “Why does the system say this?”

That “why” is not academic. It determines whether analysts can validate an insight, whether investigators can justify a finding, and whether regulators can defend an action during oversight or judicial scrutiny.

Output-centric AI systems are designed to respond. They prioritise speed, fluency, and completeness of answers. Intelligence-grade systems, by contrast, must prioritise reasoning paths, source visibility, and contextual continuity across cases and time.

This marks a necessary shift.

From AI systems that generate responses, to intelligence systems that surface evidence.

From output-centric AI to evidence-aware intelligence systems.

Only by making this transition can AI move from being a convenience layer to becoming a trusted component of intelligence analysis, law enforcement investigation, and regulatory decision-making.

Why Fluent AI Can Create False Confidence

One of the most compelling qualities of modern GenAI systems is fluency.

They generate responses that are articulate, structured, and often persuasive. In many environments, this fluency is interpreted as intelligence. In intelligence agencies, law enforcement bodies, and regulatory institutions, that assumption can introduce risk.

Fluency does not equal correctness. And confidence does not equal defensibility.

A well-worded AI response may obscure uncertainty rather than resolve it. When answers are presented with clarity and authority, gaps in source coverage or reasoning paths are not always obvious. Over time, this can create an illusion of certainty, especially when decisions must be made under pressure.

In operational environments, this effect is amplified.

During time-sensitive investigations or regulatory actions, teams may rely heavily on AI-generated outputs to accelerate analysis. If those outputs lack clear links to underlying evidence, analysts are forced to trust the system first and verify later. That reversal introduces friction, not efficiency.

Fluent AI responses can also mask the absence of context. Without visibility into how information was weighted, which sources were prioritised, or what data was excluded, users may struggle to assess reliability. In environments that demand accountability, this lack of transparency can complicate review, oversight, and justification.

None of this implies that fluent AI is inherently flawed. But in high-stakes environments, fluency without evidence can be more dangerous than uncertainty.

Uncertainty invites investigation. False confidence may suppress it.

What “Evidence-Aware AI” Actually Means

If fluency alone is insufficient, what should intelligence-grade AI deliver instead?

The answer lies in evidence awareness.

Evidence-aware AI is not defined by how convincingly it responds, but by how clearly it connects insights to underlying information. It enables users to understand not just what an answer is, but why it exists.

This capability rests on three foundational requirements.

Source Traceability

In intelligence, law enforcement, and regulatory workflows, every conclusion must be defensible.

Evidence-aware AI ensures that insights can be traced back to their origins, whether those sources are documents, transcripts, records, structured data, or media inputs such as audio, images, and video.

Rather than producing isolated responses, the system maintains a visible link between conclusions and the information that informed them. This allows analysts to verify accuracy, assess relevance, and challenge assumptions when needed.

Traceability is not an enhancement. It’s a prerequisite for trust.
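To make the idea concrete, here is a minimal Python sketch of an evidence-linked insight. It assumes a hypothetical schema in which each claim carries references back to the documents, transcripts, records, or media that informed it. The names `SourceRef`, `Insight`, and `untraceable` are illustrative and do not belong to any particular product. The check flags any insight that cites no sources, or that cites a source missing from the case corpus, so an analyst can see which claims cannot yet be verified.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class SourceRef:
    """Hypothetical pointer from a claim back to the material that informed it."""
    source_id: str  # identifier of a document, transcript, record, or media file
    kind: str       # e.g. "document", "transcript", "audio", "image"
    locator: str    # where in the source: page, timestamp range, row, or region


@dataclass
class Insight:
    """A claim plus the evidence it rests on (illustrative schema)."""
    claim: str
    sources: list[SourceRef] = field(default_factory=list)


def untraceable(insights: list[Insight], corpus: set[str]) -> list[Insight]:
    """Return insights that cannot be traced back to the case corpus.

    An insight is flagged if it cites no sources at all, or if any cited
    source_id is absent from the set of known source identifiers.
    """
    return [
        insight
        for insight in insights
        if not insight.sources
        or any(ref.source_id not in corpus for ref in insight.sources)
    ]


if __name__ == "__main__":
    # Hypothetical example data for illustration only.
    corpus = {"doc-17", "call-0042"}
    insights = [
        Insight("A and B spoke on 3 March",
                [SourceRef("call-0042", "audio", "00:12:31-00:13:05")]),
        Insight("B controls the account"),  # no evidence attached
        Insight("C signed the contract",
                [SourceRef("doc-99", "document", "p. 4")]),  # unknown source
    ]
    for insight in untraceable(insights, corpus):
        print("needs verification:", insight.claim)
```

In a real system, the corpus check would run against an indexed evidence store, and locators would follow that store's own conventions. Here they are plain strings to keep the sketch self-contained.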

Context Preservation

Evidence rarely exists in isolation.

A document gains meaning from its timeline. A communication matters because of who was involved. An image or recording derives significance from the event surrounding it.

Evidence-aware AI must therefore preserve context across time and across interactions. This includes maintaining awareness of timelines, relationships, and historical relevance as analysis evolves.

When context is lost, insights must be repeatedly reconstructed. When context is preserved, intelligence accumulates instead of resetting.

For institutions that manage long-running investigations or regulatory cases, this distinction is critical.

Explainable Reasoning Paths

Finally, evidence-aware AI must support explainable reasoning.

This goes beyond surface-level explanations. It requires the ability to understand how an output was derived: which sources contributed, how they were interpreted, and how the conclusion was formed.

Explainable reasoning paths enable analysts to review decisions, supervisors to validate findings, and institutions to remain accountable for actions informed by AI.

In intelligence-grade environments, explainability is not about transparency for its own sake. It is about accountability under scrutiny.

Together, these elements define evidence-aware AI. Not AI that merely answers questions, but AI that enables trust, validation, and defensible decision-making.

Auditability: The Non-Negotiable Requirement

In intelligence agencies, law enforcement bodies, and government regulatory institutions, decisions rarely end when they are made.

They are reviewed. They are questioned. They are revisited, sometimes months or years later.

Intelligence assessments may face internal reviews. Investigative conclusions may be examined in legal proceedings. Regulatory actions may be subject to policy oversight or judicial scrutiny. In these environments, the ability to explain after the fact is as important as the ability to decide in the moment.

This is where auditability becomes non-negotiable.

AI systems that produce outputs without a verifiable trail struggle in hindsight. A response that seemed clear at the time becomes difficult to justify when reviewers ask how it was formed, which information was considered, and what assumptions influenced the conclusion.

This is the fundamental limitation of black-box AI in intelligence-grade environments.

While many GenAI systems offer explainability demonstrations (high-level descriptions of how models work), these are not the same as real audit trails. Explaining a model’s architecture does not explain a specific decision. Intelligence operations require visibility into what information was used, how it was interpreted, and why a particular conclusion emerged.

Without this, accountability weakens.

Auditability ensures that intelligence outputs can withstand scrutiny without reconstruction. It allows institutions to stand behind decisions informed by AI, not as probabilistic suggestions, but as defensible assessments grounded in evidence.

This leads to a simple but critical standard: Intelligence-grade AI must survive hindsight.

If an AI-assisted decision cannot be reviewed, explained, and defended after the pressure has passed, it does not meet the operational requirements of intelligence, law enforcement, or regulatory environments.
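One way to approximate an audit trail that survives hindsight is an append-only log that records each AI-assisted conclusion alongside the question asked, the sources consulted, and the stated rationale. The Python sketch below is an illustration built on assumptions, not a reference design. The `AuditLog` class and its fields are hypothetical. It also adds a SHA-256 hash chain, where each entry's digest covers the previous entry's digest, so that a later edit to any recorded entry is detected when the log is verified.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """Hash a canonical JSON encoding so any change to the body changes the digest."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class AuditLog:
    """Hypothetical append-only, hash-chained log of AI-assisted conclusions."""
    entries: list[dict] = field(default_factory=list)

    def record(self, question: str, source_ids: list[str],
               conclusion: str, rationale: str) -> dict:
        body = {
            "question": question,
            "source_ids": sorted(source_ids),  # which information was used
            "conclusion": conclusion,          # what was concluded
            "rationale": rationale,            # why it was concluded
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every digest and check each link to the previous entry."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    # Hypothetical example data for illustration only.
    log = AuditLog()
    log.record("Who controls account 881?", ["doc-17", "call-0042"],
               "Likely B", "B is named as signatory in doc-17 and discusses transfers in call-0042")
    print(log.verify())  # True
    log.entries[0]["conclusion"] = "Certainly B"  # simulate an after-the-fact edit
    print(log.verify())  # False: the altered entry no longer matches its hash
```

A production audit trail would also need timestamps, user identities, and storage that reviewers trust. Those are omitted here to keep the sketch short.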

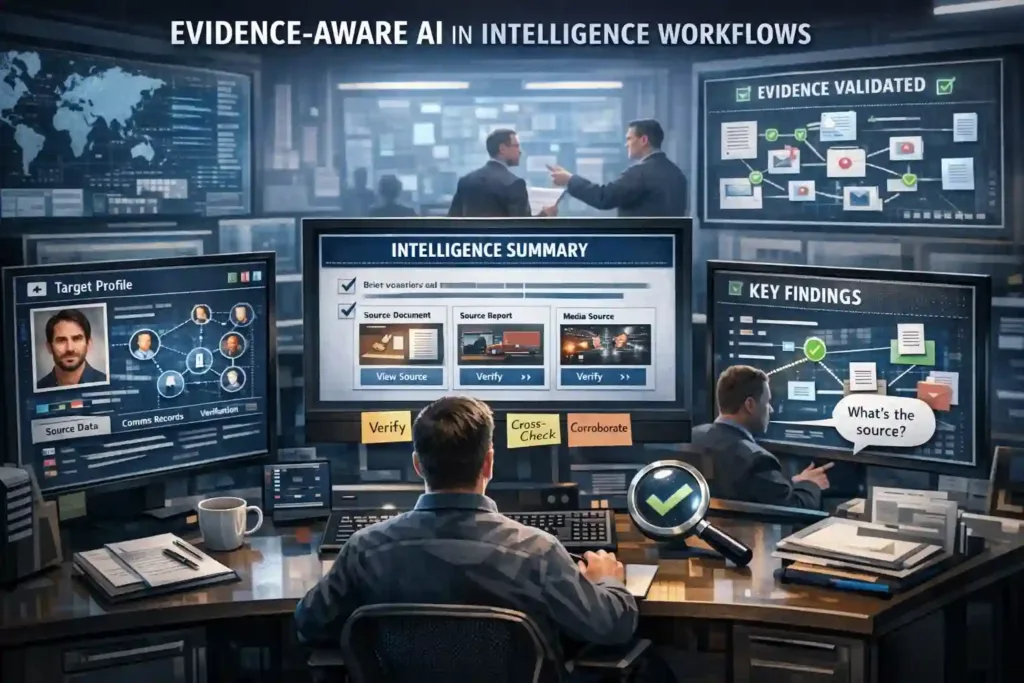

Evidence-Aware AI in Real Intelligence Workflows

The importance of evidence awareness becomes clearest when viewed through everyday intelligence workflows.

Consider the review of a summarised intelligence brief. Speed matters, but trust matters more. Analysts must be able to see not only the summary, but the underlying sources that informed it. Without that visibility, summaries become starting points for rework rather than tools for acceleration.

Or consider the validation of a profile built across multiple sources: documents, communications, records, and media. Investigators must confirm how relationships were identified, which data points were prioritised, and how conflicting information was handled. A profile that cannot be traced back to evidence becomes fragile under scrutiny.

The same applies when answering leadership questions under pressure. Decision-makers often need immediate clarity, but that clarity must be defensible. When follow-up questions arise about sources, assumptions, or context, analysts must be able to respond with confidence, not conjecture.

Across these scenarios, a consistent principle applies.

Analysts must verify, not trust blindly. Supervisors must be able to defend conclusions, not reinterpret them. Institutions must retain accountability, not delegate it to opaque systems.

Evidence-aware AI supports this reality by embedding verification into the workflow itself. It enables intelligence professionals to move faster because trust is built in, not because caution is bypassed.

This is what separates AI that merely assists from AI that can be relied upon in environments where decisions carry lasting consequences.

What Intelligence-Grade AI Must Deliver by Design

If AI is to be trusted in intelligence agencies, law enforcement bodies, and government regulatory institutions, its capabilities cannot be optional or layered on later. They must be built into the system by design.

An intelligence-grade AI system must deliver the following:

- Evidence-linked responses

Insights and answers must be directly connected to underlying source material, allowing users to trace conclusions back to documents, records, communications, or media inputs.

- Multi-format source grounding

Intelligence does not exist in text alone. AI must work across documents, audio, video, images, forms, and legacy archives, treating each as a first-class intelligence input rather than isolating them into separate workflows.

- Persistent context across sessions

Context should accumulate, not reset. Intelligence-grade AI must preserve timelines, relationships, and historical relevance across investigations and over time, ensuring continuity as cases evolve.

- Reviewable and auditable outputs

Every AI-assisted insight must be capable of withstanding review after the fact. Outputs should support validation, oversight, and accountability without requiring reconstruction under pressure.

- Secure deployment aligned with governance

AI must operate within established security boundaries and regulatory frameworks. On-premise, offline, and air-gapped deployment capabilities are not edge cases; they are core requirements in sensitive environments.

Together, these requirements define the threshold between AI that is merely impressive and AI that is operationally trustworthy.

They also explain why intelligence-grade AI looks fundamentally different from generic, consumer-oriented GenAI systems.

Intelligence Is Built on Trust, Not Just Speed

Artificial intelligence will increasingly influence how decisions are made across intelligence, law enforcement, and regulatory environments.

But speed alone will not determine its value.

In these settings, trust is operational currency. Decisions must be made quickly, but they must also be defensible, reviewable, and grounded in evidence. AI that cannot support those standards may accelerate outputs, but it does not strengthen intelligence.

The future of AI in intelligence is not defined by novelty or fluency. It is defined by alignment with how intelligence actually works: under scrutiny, under pressure, and with lasting consequences.

Intelligence-grade AI is not defined by how fast it answers, but by how confidently those answers can be defended.

That principle is what separates experimentation from adoption, and convenience from capability. It is also the standard against which all AI used in intelligence will ultimately be measured.

FAQs – Frequently Asked Questions

1. What is intelligence-grade AI?

Intelligence-grade AI refers to AI systems designed for high-stakes environments where decisions must be defensible, auditable, and grounded in evidence, not just fast or fluent.

2. How is intelligence-grade AI different from generic GenAI tools?

Generic GenAI focuses on generating responses. Intelligence-grade AI prioritises traceability, context preservation, auditability, and secure deployment within governed environments.

3. Why is evidence awareness critical in intelligence and regulatory settings?

Because decisions often face review, legal scrutiny, or oversight. AI outputs must be traceable back to source material to support validation and accountability.

4. What does “evidence-aware AI” mean in practice?

It means AI systems that link insights to underlying documents, records, media, and data sources, while preserving context, timelines, and reasoning paths.

5. Why can fluent AI answers be risky in high-stakes decisions?

Fluency can mask uncertainty. Without visible evidence paths, confident-sounding answers may create false assurance and increase verification burden later.

6. What is the difference between explainability and auditability?

Explainability describes how a model works in theory. Auditability enables review of specific AI-assisted decisions after the fact, with clear evidence trails.

7. Can intelligence-grade AI operate in secure or offline environments?

Yes. Intelligence-grade AI is typically designed to operate on-premise, offline, or in air-gapped environments to meet strict security and governance requirements.

8. Does intelligence-grade AI replace analysts or decision-makers?

No. It augments human expertise by reducing friction in intelligence workflows, while judgment, responsibility, and accountability remain with professionals.